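The warning “FP16 is not supported on CPU; using FP32 instead” appears when software, most often OpenAI’s Whisper, is asked to run a model in half precision (FP16) on the CPU. Many CPU code paths, including several of PyTorch’s CPU kernels, have no FP16 implementation, so the program falls back to FP32. FP32 is more precise but needs twice the memory per value. FP32 remains the standard for general-purpose computing on CPUs.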

“Want to speed up your CPU-based computations? Discover why FP16 might not be the magic bullet and explore the FP32 alternative.”

In this article, we discuss the warning “FP16 is not supported on CPU; using FP32 instead”.


Introduction

In recent years, demand for faster and more efficient computing has grown, especially with the rise of AI and machine learning. One term that often comes up in these discussions is FP16, a floating-point format widely used on GPUs. However, many users see the message “FP16 is not supported on CPU; using FP32 instead,” particularly when they run Whisper without a GPU. What does this mean? Why does FP16 matter, and why do CPUs fall back to FP32? Let’s break it down.

What is FP16?

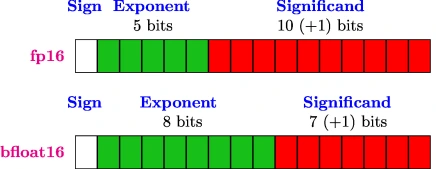

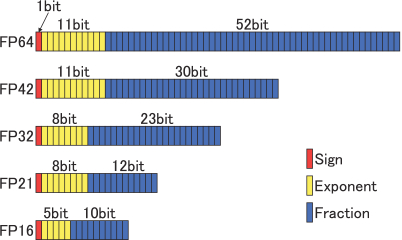

FP16 stands for “half-precision floating point.” It stores a number in 16 bits: 1 sign bit, 5 exponent bits and 10 fraction bits (IEEE 754 binary16). Compared with FP32, it halves memory usage and, on hardware with dedicated FP16 units, can speed up computation. The trade-off is roughly three significant decimal digits of precision and a largest finite value of 65504. In tasks like deep learning, speed and memory are critical and small rounding errors are usually tolerable. FP16 is a popular choice there because it lets systems store and move large volumes of data with fewer resources.
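To see how a number is actually stored in 16 bits, here is a minimal sketch using only Python’s standard library. It relies on the `struct` module’s `'e'` format code, which packs a float as IEEE 754 half precision (1 sign bit, 5 exponent bits, 10 fraction bits). The helpers `fp16_bits` and `fp16_fields` are hypothetical names written for illustration, not part of any library.

```python
import struct
from typing import Tuple

def fp16_bits(x: float) -> int:
    """Return the 16-bit pattern of x after rounding it to IEEE 754 half precision."""
    (bits,) = struct.unpack("<H", struct.pack("<e", x))
    return bits

def fp16_fields(x: float) -> Tuple[int, int, int]:
    """Split the half-precision encoding of x into (sign, exponent, fraction)."""
    bits = fp16_bits(x)
    sign = bits >> 15               # 1 sign bit
    exponent = (bits >> 10) & 0x1F  # 5 exponent bits, stored with a bias of 15
    fraction = bits & 0x3FF         # 10 fraction bits (the leading 1 is implicit)
    return sign, exponent, fraction

print(hex(fp16_bits(1.0)))  # 0x3c00
print(fp16_fields(-2.5))    # (1, 16, 256): -(1 + 256/1024) * 2**(16 - 15)
```

The same unpacking idea works for FP32 with the `'f'` and `'<I'` format codes, where the fields are 1, 8 and 23 bits wide.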

What is FP32?

FP32, by contrast, is the “single-precision floating point” format and has long been the standard on CPUs. It uses 32 bits (1 sign, 8 exponent and 23 fraction bits). That gives about seven significant decimal digits and a far wider range than FP16. The cost is twice the memory per value and, on FP16-capable hardware, lower throughput. This makes it a reliable default for a wide range of computing tasks, especially those requiring higher accuracy.
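The precision gap between the two formats is easy to observe. This minimal sketch stores a value in FP16 and in FP32 using Python’s standard `struct` module (`'e'` for half precision, `'f'` for single precision) and reads it back. The helper name `round_trip` is hypothetical.

```python
import struct

def round_trip(x: float, fmt: str) -> float:
    """Store x in a struct format ('e' = FP16, 'f' = FP32) and read it back."""
    return struct.unpack("<" + fmt, struct.pack("<" + fmt, x))[0]

print(round_trip(0.1, "e"))  # 0.0999755859375     (FP16)
print(round_trip(0.1, "f"))  # 0.10000000149011612 (FP32)
print(round_trip(1 / 3, "e"), round_trip(1 / 3, "f"))
```

Neither format stores 0.1 exactly, but the FP32 error appears around the ninth decimal place, while the FP16 error appears in the fifth.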


Why FP16 is Not Supported on CPUs

The “FP16 is not supported on CPU; using FP32 instead” message comes from a mix of hardware history and software support. For a long time, mainstream x86 CPUs had no instructions for FP16 arithmetic. The F16C extension only converts between FP16 and FP32. As a result, numerical libraries built their CPU code paths around FP32. Many CPU kernels, such as some of PyTorch’s convolution routines, have no FP16 implementation at all, so programs like Whisper switch to FP32 rather than fail. FP16’s lower precision can also cause problems in applications where detailed calculations are essential.

FP32: The Default for CPUs

Because of this history, FP32 is the natural choice on CPUs, and it still performs well in a wide variety of applications. From scientific computations to gaming and everyday tasks, FP32 provides the right balance of speed and precision for many users.

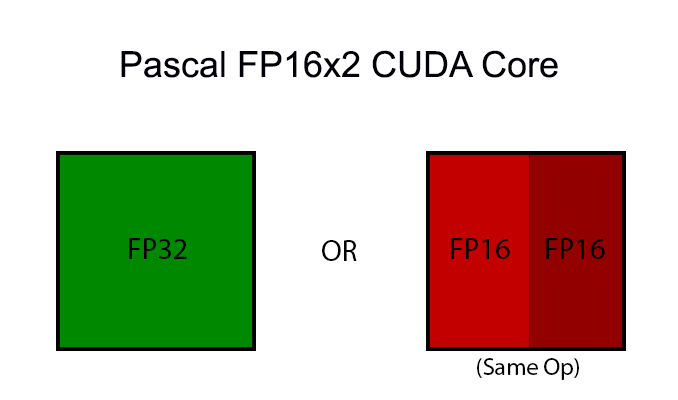

FP16 in GPUs vs. CPUs

Unlike CPUs, modern GPUs (Graphics Processing Units) combine massive parallelism with hardware that processes FP16 at higher throughput than FP32, such as the Tensor Cores in recent NVIDIA GPUs. FP16 shines in fields like AI and machine learning, where vast datasets are processed. Faster arithmetic and half the memory per value give GPUs a significant advantage in tasks that don’t require the precision of FP32.
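The memory side of that advantage is simple arithmetic. Each FP16 value takes 2 bytes, FP32 takes 4 and FP64 takes 8. The sketch below assumes a hypothetical 100-million-parameter model and ignores any framework overhead. The helper `weights_megabytes` is illustrative only.

```python
import struct

# Bytes needed to store one value in each format ('e', 'f', 'd' are struct codes).
BYTES_PER_VALUE = {
    "FP16": struct.calcsize("e"),  # 2 bytes
    "FP32": struct.calcsize("f"),  # 4 bytes
    "FP64": struct.calcsize("d"),  # 8 bytes
}

def weights_megabytes(num_params: int, precision: str) -> float:
    """Approximate storage for num_params values, ignoring framework overhead."""
    return num_params * BYTES_PER_VALUE[precision] / 1_000_000

params = 100_000_000  # hypothetical model size
for name in BYTES_PER_VALUE:
    print(f"{name}: {weights_megabytes(params, name):.0f} MB")  # 200, 400, 800 MB
```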

Why FP32 is Used Instead of FP16 on CPUs

Another reason CPUs stay with FP32 lies in the nature of their workloads. CPUs handle a broad array of operations where precision often matters more than raw throughput. FP32’s extra bits allow more accurate computations. This is critical in scenarios such as financial modeling or scientific research, where small rounding errors can add up over many operations.

Performance Differences Between FP16 and FP32

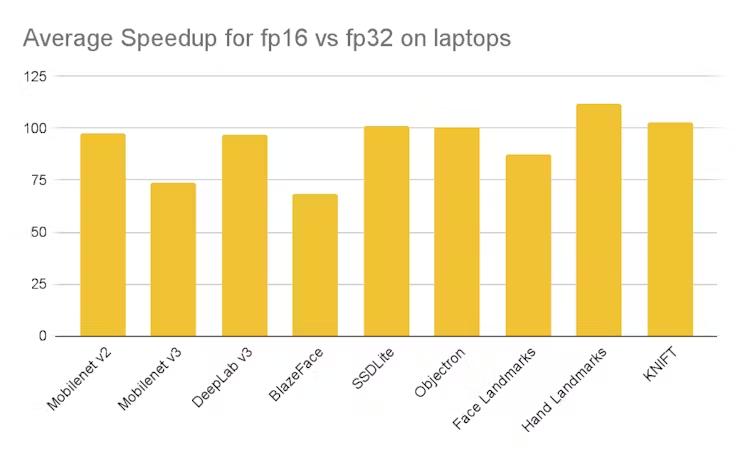

When you compare FP16 and FP32, the trade-off becomes clear. On hardware that accelerates it, FP16 is faster in scenarios where precision isn’t critical, such as image processing or many AI inference tasks. FP32 is necessary for applications that demand a higher degree of accuracy. On CPUs without FP16 kernels, it is also the only option, which is why software falls back to it.


Applications Best Suited for FP16

FP16 excels in applications where speed is essential and the need for precision is secondary. Examples include:

- AI and deep learning

- Image and video processing

- Neural networks

In these fields, the ability to handle large datasets quickly can outweigh the slight loss of precision that comes with FP16.

Challenges of Using FP16 on CPUs

One of the biggest challenges of FP16, on CPUs or any other hardware, is the loss of precision. With only 10 fraction bits, FP16 keeps about three significant decimal digits. Its largest finite value is 65504, so results much larger than that overflow to infinity. Rounding errors can also accumulate over long computations, which can lead to incorrect results and a lack of reliability in certain applications.
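To illustrate how rounding errors accumulate, this minimal sketch adds 1.0 five thousand times and rounds the running total to FP16 or FP32 after every step. It emulates each format with Python’s `struct` module. Real hardware and libraries may accumulate in higher precision internally, so treat this as an illustration of the format’s limits, not of any particular CPU.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest half-precision value (ties to even)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round x to the nearest single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def running_sum(n: int, step: float, rounder) -> float:
    """Add `step` n times, rounding the running total after every addition."""
    total = 0.0
    for _ in range(n):
        total = rounder(total + step)
    return total

print(running_sum(5000, 1.0, to_fp16))  # 2048.0
print(running_sum(5000, 1.0, to_fp32))  # 5000.0
```

The FP16 total stalls at 2048 because neighbouring FP16 values in that range are 2 apart. At that point, 2048 + 1 rounds back down to 2048.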

The Future of FP16 Support in CPUs

Hardware support is already improving. Some CPUs can perform FP16 arithmetic directly: ARM processors that implement the ARMv8.2-A half-precision extension, including Apple silicon, and Intel Xeon processors with AVX-512 FP16. Software has been slower to follow, however. Many libraries still fall back to FP32 on the CPU, and that is unlikely to change completely in the short term.

FP16 in Neural Networks

In neural networks, the ability to process data quickly is crucial. FP16 offers a speed boost that can significantly improve performance when training models and making predictions. GPUs continue to dominate in this space, thanks to their dedicated hardware for FP16 calculations.


How to Handle FP32 When FP16 Isn’t Supported

When FP16 is unavailable on the CPU, developers can still optimize FP32 performance. Frameworks such as TensorFlow and PyTorch provide CPU builds backed by optimized math libraries, and PyTorch lets you control CPU parallelism with torch.set_num_threads(). In Whisper, passing fp16=False requests FP32 from the start, which also keeps the warning from appearing.

Will FP16 Eventually Replace FP32?

FP16’s rapid adoption in certain sectors suggests that it will become more prevalent. However, FP32’s precision is still a necessity in many areas, so a complete shift to FP16 is unlikely anytime soon. For now, CPU software usually falls back to FP32, but FP16 support remains an active area of development.

FP16 is not supported on CPU; using FP32 instead (Mac)

On Macs, the warning appears for the same reason as on other systems: Whisper switches to FP32 whenever the model runs on the CPU. This is a software decision rather than a hardware limit. Apple silicon CPUs do implement FP16 arithmetic, but Whisper’s CPU path does not use it, so FP32 is used instead.

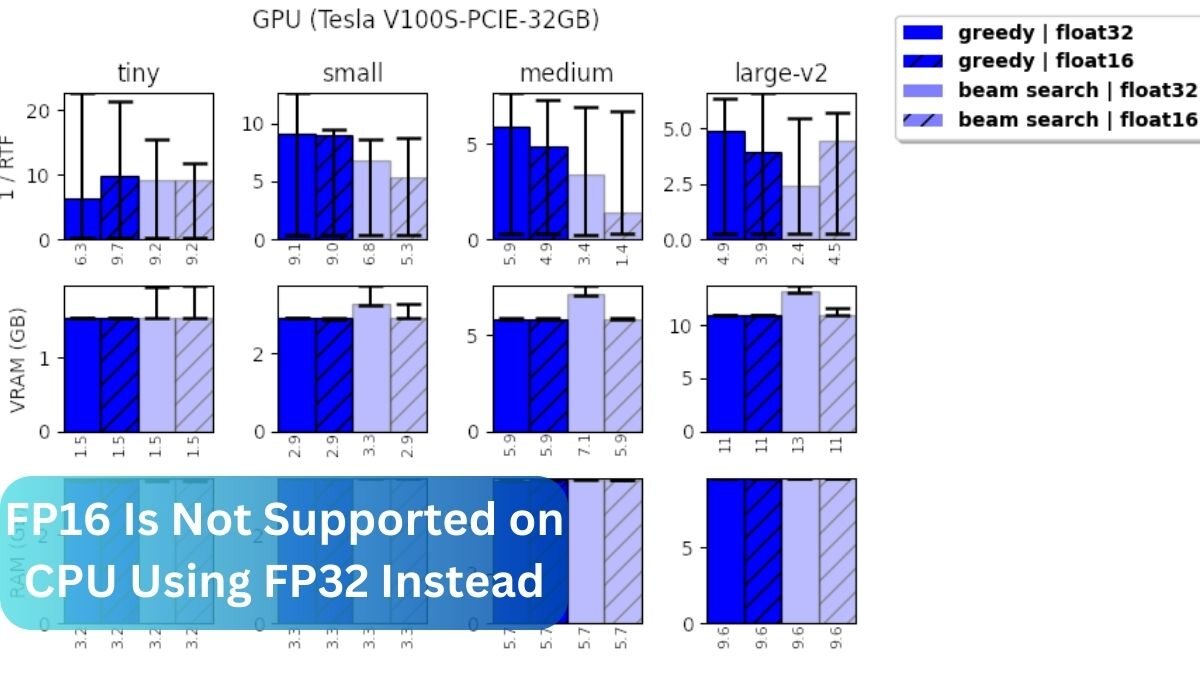

Whisper FP16 vs FP32

Whisper uses FP16 by default (fp16=True), which speeds up processing and reduces memory consumption on GPUs. When the model runs on the CPU, Whisper switches to FP32 automatically and prints the warning. FP32 is also the safer choice when accuracy is a higher priority than speed.

Whisper not using GPU

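If Whisper is not using the GPU, the usual reason is that PyTorch cannot see one. Whisper’s load_model() picks "cuda" only when torch.cuda.is_available() returns True and falls back to the CPU otherwise. Common causes are a CPU-only PyTorch build, missing or outdated GPU drivers, or a mismatch between the driver and the CUDA version PyTorch was built for. Installing a CUDA-enabled PyTorch build with correctly configured drivers usually resolves the issue.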
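A quick way to narrow down the cause is to ask PyTorch directly. The sketch below is a hypothetical diagnostic helper (`gpu_status` is not part of Whisper or PyTorch). It uses only `torch.cuda.is_available()`, `torch.version.cuda` (which is `None` in builds without CUDA) and `torch.cuda.get_device_name()`. If PyTorch is not installed, it reports that instead of crashing.

```python
import importlib.util

def gpu_status() -> str:
    """Report whether PyTorch, and therefore Whisper, can use a GPU."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"
    import torch  # imported lazily so the check works without PyTorch

    if torch.cuda.is_available():
        return "GPU available: " + torch.cuda.get_device_name(0)
    if torch.version.cuda is None:
        return "PyTorch was built without CUDA (for example, a CPU-only build)"
    return "PyTorch has CUDA support, but no GPU is visible (check the driver)"

print(gpu_status())
```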

FP16 on CPU

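FP16 support on CPUs is uneven. Many CPUs lack FP16 arithmetic instructions, and many libraries lack FP16 CPU kernels even when the hardware has them. As a result, software typically defaults to FP32, which gives more accurate results but uses twice the memory and, on hardware that accelerates FP16, can run more slowly.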

Whisper transcribe FP16 False

Passing fp16=False to Whisper’s transcribe() (or --fp16 False on the command line) makes it use FP32 instead of FP16, prioritizing precision over speed. This is the right setting on systems where FP16 is not supported, such as CPU-only machines, and it prevents the warning from appearing.
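In a script, you can choose the precision from the device instead of hard-coding it. This is a minimal sketch: `whisper_decode_options` and `transcribe_file` are hypothetical helpers. The Whisper calls used (`whisper.load_model(name, device=...)` and `model.transcribe(path, fp16=...)`) are the package’s public API. Whisper and PyTorch are imported inside the function, so the option helper can be used and tested without them.

```python
def whisper_decode_options(device: str) -> dict:
    """Request FP16 only on CUDA devices; use FP32 everywhere else."""
    return {"fp16": device.startswith("cuda")}

def transcribe_file(path: str, model_name: str = "base") -> str:
    # Lazy imports: only needed when actually transcribing.
    import torch
    import whisper

    device = "cuda" if torch.cuda.is_available() else "cpu"
    model = whisper.load_model(model_name, device=device)
    result = model.transcribe(path, **whisper_decode_options(device))
    return result["text"]
```

For example, `transcribe_file("audio.mp3")` (with a hypothetical file name) runs in FP16 on a CUDA GPU and in FP32 on the CPU, without triggering the warning.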

Whisper FileNotFoundError: [WinError 2] The system cannot find the file specified

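This error typically occurs when Whisper cannot start a program or locate a file it needs. On Windows, the most common cause is that ffmpeg is not installed or not on the PATH. Whisper runs ffmpeg to decode audio, so it must be available. Install ffmpeg, make sure its folder is on PATH, and double-check the audio file path.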
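Before calling Whisper, a short pre-flight check can catch both causes. This sketch uses only the standard library: `shutil.which` looks up an executable on PATH, and `os.path.isfile` checks the audio path. The function name `whisper_preflight` is hypothetical.

```python
import os
import shutil

def whisper_preflight(audio_path: str) -> list:
    """Return likely causes of '[WinError 2]' before handing a file to Whisper."""
    problems = []
    if shutil.which("ffmpeg") is None:
        problems.append("ffmpeg was not found on PATH; Whisper needs it to decode audio")
    if not os.path.isfile(audio_path):
        problems.append(f"audio file not found: {os.path.abspath(audio_path)}")
    return problems

for problem in whisper_preflight("audio.mp3"):  # hypothetical file name
    print(problem)
```

An empty list means neither of these common causes applies.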

FP16 vs FP32 vs FP64

FP16 uses 16 bits. It is faster on supporting hardware but less precise (about 3 significant decimal digits). FP32, using 32 bits, provides more precision (about 7 digits) at moderate speed. FP64, with 64 bits, offers the highest precision (about 15 to 16 digits) but is slower and uses the most memory, making it suitable for demanding scientific computations.
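These figures follow directly from each format’s bit layout. Here is a minimal sketch for the IEEE 754 binary formats, assuming p fraction bits and a maximum unbiased exponent E_max. Machine epsilon is 2^-p, the largest finite value is (2 − 2^-p) × 2^E_max, and the precision is about (p + 1) × log10(2) decimal digits.

```python
import math

# (fraction bits, maximum unbiased exponent) for each IEEE 754 binary format
FORMATS = {"FP16": (10, 15), "FP32": (23, 127), "FP64": (52, 1023)}

def describe(fraction_bits: int, max_exponent: int):
    """Return (machine epsilon, largest finite value, approx. decimal digits)."""
    epsilon = 2.0 ** -fraction_bits                  # gap between 1.0 and the next value
    largest = (2.0 - epsilon) * 2.0 ** max_exponent  # largest finite value
    digits = (fraction_bits + 1) * math.log10(2)     # +1 for the implicit leading bit
    return epsilon, largest, digits

for name, params in FORMATS.items():
    eps, largest, digits = describe(*params)
    print(f"{name}: epsilon={eps:.3g}, max={largest:.5g}, ~{digits:.1f} digits")
```

For FP64, the results match Python’s own `sys.float_info.epsilon` and `sys.float_info.max`, since Python floats are FP64.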

RuntimeError: slow_conv2d_cpu not implemented for 'Half'

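This error occurs because some operations, such as conv2d, have no half-precision (FP16) kernel on the CPU in the installed PyTorch version. There are two fixes. You can convert both the model and its inputs to FP32 (for example with .float()) before running on the CPU. Alternatively, run on a GPU that supports FP16 operations.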


FAQs

What are the key differences between FP16 and FP32?

FP16 uses 16 bits, while FP32 uses 32 bits, resulting in a trade-off between speed (FP16) and precision (FP32).

Is there any way to enable FP16 on CPUs?

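It depends on both hardware and software. Some current CPUs can compute in FP16 natively, such as ARM chips with the ARMv8.2-A half-precision extension (including Apple silicon) and Intel Xeons with AVX-512 FP16. However, the library you use must also provide FP16 CPU kernels. Tools like Whisper currently use FP32 on any CPU.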

How does FP16 benefit AI workloads?

FP16 allows for faster computations and lower memory usage, making it ideal for processing large datasets in AI models.

Are CPUs falling behind in modern computing because of FP16 limitations?

CPUs still excel in tasks requiring precision, but in AI and machine learning, GPUs with FP16 support are ahead in terms of speed.

What are the advantages of FP32?

FP32 provides more precision and accuracy, making it essential for applications like scientific computing and financial modeling.

Conclusion

FP16 offers significant performance advantages, but limited support in CPU hardware and software restricts its use outside GPUs. For now, FP32 remains the standard for general-purpose computing on CPUs. However, as newer processors and libraries add FP16 support, the gap between speed and precision may narrow.
